Integrating Selenium with Scrapy makes it possible to scrape pages whose content is rendered dynamically.

Install the browser automation tool

pip install -i https://pypi.douban.com/simple/ selenium

Download ChromeDriver and place it in the Chrome installation directory:

C:\Program Files (x86)\Google\Chrome\Application

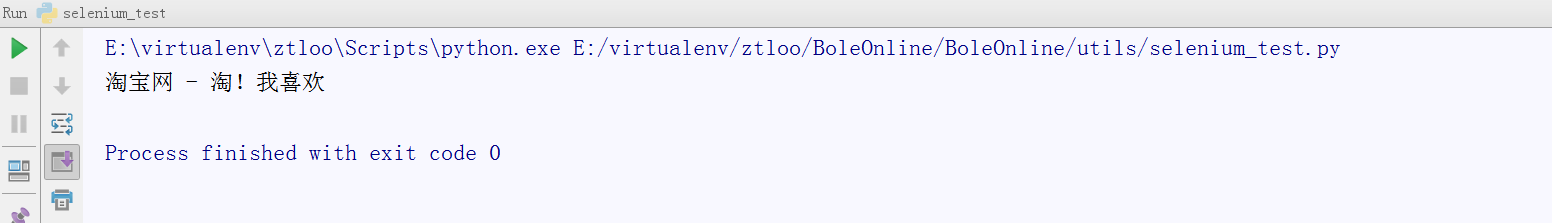

Test code

# coding=utf-8

from selenium import webdriver

driver = webdriver.Chrome(executable_path="C:/Program Files (x86)/Google/Chrome/Application/chromedriver.exe")

driver.get('https://www.taobao.com')

print(driver.title)

Configure ChromeDriver not to load images, which speeds up requests

chrome_options = webdriver.ChromeOptions()

prefs = {"profile.managed_default_content_settings.images":2}

chrome_options.add_experimental_option("prefs",prefs)

driver = webdriver.Chrome(executable_path="C:/Program Files (x86)/Google/Chrome/Application/chromedriver.exe",chrome_options=chrome_options)
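The snippets above use the Selenium 3 keyword arguments `executable_path` and `chrome_options`. Selenium 4 replaced them with a `Service` object and an `options` argument, so a rough equivalent for newer installs might look like the sketch below. The helper name `make_chrome_driver` and its parameters are hypothetical, chosen only for illustration.

```python
def make_chrome_driver(driver_path, load_images=True):
    """Create a Chrome driver using the Selenium 4 style API (a sketch)."""
    # Imported inside the function so this module still loads
    # on machines where Selenium is not installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service

    options = webdriver.ChromeOptions()
    if not load_images:
        # Same preference as above: the value 2 blocks image loading.
        prefs = {"profile.managed_default_content_settings.images": 2}
        options.add_experimental_option("prefs", prefs)

    service = Service(executable_path=driver_path)
    return webdriver.Chrome(service=service, options=options)
```

Calling `make_chrome_driver("C:/Program Files (x86)/Google/Chrome/Application/chromedriver.exe", load_images=False)` would then return a driver that can be used exactly like `driver` in the examples above.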

PhantomJS is a headless browser (it has no user interface) and is efficient.

Its performance degrades when several processes are running.

driver = webdriver.PhantomJS(executable_path="E:/virtualenv/phantomjs-2.1.1-windows/bin/phantomjs.exe")

driver.get('https://www.taobao.com')

print(driver.title)

Scrapy settings

DOWNLOADER_MIDDLEWARES = {
    'BoleOnline.middlewares.JSPageMiddleware': 1,
    'BoleOnline.middlewares.RandomUserAgentMiddlware': 543,
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
    # 'BoleOnline.middlewares.RandomProxyMiddleware': 400,
}
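The numbers in `DOWNLOADER_MIDDLEWARES` are priorities: Scrapy calls `process_request` in increasing order of these values, and a value of `None` disables a middleware. The sketch below only mimics that ordering in plain Python to show what the setting above implies. `active_order` is a hypothetical helper, not a Scrapy function, and it ignores the built-in default middlewares that Scrapy merges in.

```python
DOWNLOADER_MIDDLEWARES = {
    'BoleOnline.middlewares.JSPageMiddleware': 1,
    'BoleOnline.middlewares.RandomUserAgentMiddlware': 543,
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
}


def active_order(middlewares):
    """Return the enabled middleware paths sorted by ascending priority."""
    # Entries mapped to None are disabled and therefore dropped.
    enabled = {path: prio for path, prio in middlewares.items() if prio is not None}
    return sorted(enabled, key=enabled.get)


for path in active_order(DOWNLOADER_MIDDLEWARES):
    print(path)
```

With priority 1, `JSPageMiddleware` runs first. When its `process_request` returns a response, Scrapy does not call the `process_request` methods of the remaining middlewares for that request.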

Scrapy middleware

import time

from scrapy.http import HtmlResponse


class JSPageMiddleware(object):
    # Fetch dynamic pages through Chrome
    def process_request(self, request, spider):
        if spider.name == "jobbole":
            spider.browser.get(request.url)
            # Fixed pause to give the page's JavaScript time to run
            time.sleep(3)
            print("Visiting: {0}".format(request.url))
            # Returning a response here makes Scrapy skip its own downloader
            return HtmlResponse(url=spider.browser.current_url, body=spider.browser.page_source, encoding="utf-8", request=request)
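A fixed three-second sleep waits too long on fast pages and not long enough on slow ones. One alternative is to poll until the page reports that it has finished loading. The sketch below is plain Python: `wait_until` is a hypothetical helper and `FakeBrowser` is a stand-in used only so the example runs without a browser; neither is part of Selenium or Scrapy. With a real driver the predicate could be `lambda: spider.browser.execute_script("return document.readyState") == "complete"`, and Selenium's own `WebDriverWait` provides the same idea.

```python
import time


def wait_until(predicate, timeout=10.0, interval=0.1):
    """Poll predicate() until it is truthy or `timeout` seconds have passed.

    Returns True if the predicate succeeded, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        if predicate():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


class FakeBrowser:
    """Stand-in for a Selenium driver: reports 'complete' after a few polls."""

    def __init__(self, polls_until_ready):
        self.remaining = polls_until_ready

    def ready_state(self):
        if self.remaining > 0:
            self.remaining -= 1
            return "loading"
        return "complete"


browser = FakeBrowser(polls_until_ready=3)
loaded = wait_until(lambda: browser.ready_state() == "complete",
                    timeout=2.0, interval=0.01)
print(loaded)
```

The middleware would call the helper right after `spider.browser.get(request.url)` and build the `HtmlResponse` once it returns.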

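Scrapy spider with the extraction logic: jobbole.py

import scrapy
# PyDispatcher import; scrapy.xlib.pydispatch is no longer available in recent Scrapy releases
from pydispatch import dispatcher
from scrapy import signals
from selenium import webdriver


# The original omits the class header; scrapy.Spider is assumed as the base class
class JobboleSpider(scrapy.Spider):
    name = "jobbole"

    def __init__(self):
        self.browser = webdriver.Chrome(executable_path="C:/Program Files (x86)/Google/Chrome/Application/chromedriver.exe")
        super(JobboleSpider, self).__init__()
        # Call spider_closed when Scrapy emits the spider_closed signal
        dispatcher.connect(self.spider_closed, signals.spider_closed)

    def spider_closed(self, spider):
        # Quit Chrome when the spider exits
        print("spider closed")
        self.browser.quit()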

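Pausing and resuming Scrapy

1. scrapy crawl lagou -s JOBDIR=job_info/001 saves the pause state to the directory job_info/001 (-s is short for --set).

2. Different spiders need different directories.

3. JOBDIR=job_info/001 can also be set in settings or in the spider's custom_settings.

4. Pressing Ctrl-C once saves the pause state to 001. To resume, run scrapy crawl lagou -s JOBDIR=job_info/001 again and the crawl continues with the requests that were not finished.

5. On Linux, stop the crawler process with pkill -f main.py. Adding -9 sends SIGKILL, which forces the process to exit immediately, so Scrapy may not get the chance to save its pause state.

6. Automatic throttling: turn on the AUTOTHROTTLE settings.

7. Per-spider settings: custom_settings = {"COOKIES_ENABLED": False}

8. Run telnet localhost 6023 and then est() to view the engine status. The telnet client on your own machine must be enabled first.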
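Several of the points above can be collected in the project's `settings.py`. The fragment below is a sketch: the directory name comes from the example above, while the AutoThrottle delay values are illustrative assumptions that would need tuning per site.

```python
# settings.py (fragment)

# Persist the crawl state here so an interrupted crawl can be resumed.
# With several spiders, set this per spider via custom_settings instead,
# because each spider needs its own directory.
JOBDIR = "job_info/001"

# Let Scrapy adapt the download delay to the server's response times.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5   # initial delay in seconds (assumed value)
AUTOTHROTTLE_MAX_DELAY = 60    # upper bound in seconds (assumed value)

# Do not store or send cookies.
COOKIES_ENABLED = False

# Telnet console reached with `telnet localhost 6023`.
TELNETCONSOLE_ENABLED = True
TELNETCONSOLE_PORT = [6023, 6073]
```

Values passed on the command line with `-s` take precedence over those in `settings.py`, so the same project can still be started with a different `JOBDIR` when needed.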

同乐学堂
